Knowledge Discovery Efficiency (KEDE) and Ashby's Law of Requisite Variety

Abstract

We address real-world applications of Ashby's Law by adopting Ashby's strict black-box perspective: only external behaviour is observable. First, we define the multi-stage selection process of narrowing down and selecting the appropriate response from the set of alternative responses as the Knowledge Discovery Process. We then label H(X|Y) as the knowledge to be discovered, which is the gap in internal variety that has to be compensated by selection. This quantifies how much disorder the regulator still permits and, conversely, how close the system comes to meeting Ashby's requisite-variety condition. In information-theoretic terms, perfect regulation requires H(X|Y) = 0. Next, we quantify the knowledge to be discovered H(X|Y) based on the observable outcomes E. Building on this result, we generalize Knowledge-Discovery Efficiency (KEDE), a scalar metric that quantifies how efficiently a system closes the gap between the variety demanded by its environment and the variety embodied in its prior knowledge. KEDE operationalises requisite variety when internal mechanisms remain opaque, offering a diagnostic tool for evaluating whether biological, artificial, or organisational systems absorb environmental complexity at a rate sufficient for effective regulation. Finally, we present applications of KEDE in diverse domains, including typing the longest English word, measuring software development, testing intelligence, playing basketball, assembling furniture, and the speed of light in a medium.

1. Introduction

The Law of Requisite Variety, formulated by W. Ross Ashby, states that for a system to effectively regulate its environment, it must have at least as much variety/complexity as its environment. This principle is foundational in disciplines such as cybernetics, control theory, and machine learning.

The concept of requisite variety has since been applied across diverse domains, including organizational theory, ecology, and information systems. It underscores the necessity for systems to adapt to environmental complexity in order to maintain stability and achieve intended outcomes.

Real-world attempts to apply Ashby's Law of Requisite Variety face three persistent obstacles. (i) Combinatorial explosion: enumerating all relevant states of a system and its environment quickly becomes intractable, especially when hidden or unmeasured variables are present. (ii) Dual control dilemma: a regulator must simultaneously amplify its own control variety and attenuate external variety—an optimization that is delicate in multiscale, hierarchical, and time-varying settings such as digital ecosystems or military command structures. (iii) Resource constraints: limited data, computational power, and organisational capacity often preclude sophisticated control architectures. Existing remedies—markup-language state catalogues, iterative multidimensional sampling, and distributed self-organising controllers—mitigate but do not eliminate these limitations.

In section 2, we provide a detailed overview of Ashby's Law of Requisite Variety, including its mathematical formulation and implications for system regulation. We also discuss the present-day understanding of residual variety and its significance in the context of Ashby's Law. In section 3, we discuss the challenges of applying Ashby's Law to real-world systems, including combinatorial explosion, the dual control dilemma, and resource constraints. We propose a solution to these challenges: treat the system as a black box, observe the probability of successful outcomes in response to disturbances, and estimate the gap in its internal variety from that probability. In section 4, we begin to establish the solution by introducing the Knowledge Discovery Process, the multi-stage process of narrowing down and selecting the appropriate response from a set of alternative responses. We then label H(X|Y) as the knowledge to be discovered, which is the gap in internal variety that has to be compensated by selection. In section 5, we show how to quantify the knowledge to be discovered H(X|Y) based on the observable outcomes E. In section 6, we generalize Knowledge-Discovery Efficiency (KEDE), a scalar metric that quantifies how efficiently a system closes the gap between the variety demanded by its environment and the variety embodied in its prior knowledge. Finally, in section 7, we explore applications of KEDE in various domains, demonstrating its utility as a diagnostic tool for evaluating system performance and adaptability.

2. The Law of Requisite Variety

In practice, the question of regulation usually arises in this way: The essential variables E are given, and also given is the set of states S in which they must be maintained if the organism is to survive (or the industrial plant to run satisfactorily). These two must be given before all else. Before any regulation can be undertaken or even discussed, we must know what is important and what is wanted. ... It is assumed that outside considerations have already determined what is to be the goal, i.e. what are the acceptable states S. Our concern...is solely with the problem of how to achieve the goal in spite of disturbances and difficulties[1].

Given a set of elements, its variety is the number of elements that can be distinguished. Thus the set {g b c g g c } has a variety of 3 letters. Variety comprises any attribute of a system capable of multiple 'states' that can be made different or changed.
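As a minimal illustration in Python (the helper name `variety` is ours and is not taken from Ashby), variety in this counting sense is simply the number of distinct elements, and its logarithm to base 2 expresses the same quantity in bits:

```python
import math

def variety(elements):
    """Ashby's variety: the number of distinguishable elements."""
    return len(set(elements))

letters = ["g", "b", "c", "g", "g", "c"]
v_letters = variety(letters)          # three distinct letters: g, b, c
bits = math.log2(v_letters)           # the same variety measured in bits

print(v_letters, round(bits, 3))
```

Repeated occurrences of a letter do not add variety; only distinguishable elements count.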

The Law of Requisite Variety, formulated by W. Ross Ashby, states that:

For a system to effectively regulate its environment, it must have at least as much variety as its environment

Ashby's Law is held to be true across the diverse disciplines of informatics, system design, cybernetics, communications systems and information systems.

Mathematical Formulation

In Ashby's terms, we can think of the system as a transducer, where:

- D is the set of disturbances, representing the possible states of disturbances that a system may experience.

- R is the set of responses, representing the possible regulatory actions that counteract disturbances.

- O is the set of realized outcomes, representing the possible outcomes that can result from the disturbance-response pairs (D, R).

- E is the subset of acceptable outcomes, representing the values / essential-variable states that the system aims to achieve, induced by a valuation mapping v : O → E. E may be as simple as the 2-element set {good, bad}, and is commonly an ordered set. Some subset of E can be defined as the “goal”[8].

In the deterministic pay-off-matrix case, the environment is the fixed function T : D x R → O, and evaluation is v : O → E.

If D and R are treated as random variables over episodes, then the induced conditional distribution is

P(E | D, R) determined by E = v(T(D,R)).

In a noisy environment, replace T by a channel P(O | D, R).

This can be represented as a Table of Outcomes (T) where:

- T is the pay-off matrix, i.e. a fixed mapping T : D x R → O, or the fixed transition rule of the environment.

- Rows represent disturbances D.

- Columns represent regulatory responses R.

- Entries represent realized outcomes O = {o11, o12, o13, ..., o21, o22, o23, ..., o31, o32, o33, ..., ...} in the cells of the matrix, with o = T(d, r) ∈ O, where d is a disturbance and r is a regulatory response.

| T (rows D, columns R) | r₁ | r₂ | r₃ | ... |
|---|---|---|---|---|
| d₁ | o₁₁ | o₁₂ | o₁₃ | ... |
| d₂ | o₂₁ | o₂₂ | o₂₃ | ... |
| d₃ | o₃₁ | o₃₂ | o₃₃ | ... |
| d₄ | o₄₁ | o₄₂ | o₄₃ | ... |
| ... | ... | ... | ... | ... |

The Table of Outcomes (T) is a fixed, structured space of possibilities, or the fixed transition rule of the environment, which directly determines the essential variables E.

In simplified analyses, O and E are sometimes conflated, but they are conceptually distinct.


The regulator's accumulated structure M is its learned law of action.

In the deterministic case, M : D → R.

In general (learning, uncertainty), treat it as a policy P(R | D), i.e. how probability mass is allocated across responses for each disturbance.

Across time, the learning object M is not fixed. If the table T is the space of possibilities, then M is the mechanism that induces a measure over possible paths through that space.

Learning and rework do not alter the structure of the Table of Outcomes T. Instead, they modify the regulator's accumulated structure M. Changes in M alter which disturbance-action pairs become more or less probable and therefore how the system's realized trajectories are distributed over the table T.

Rework corresponds to a revision of the accumulated structure M: probability mass is shifted away from previously selected or ineffective responses and reassigned to alternative responses for the same disturbance. Thus, the Table of Outcomes (T) remains a fixed space of possibilities, while learning and rework change only the regulator's induced distribution of trajectories through that space, not the space itself.

Each input d from D is transformed via selection into a response

$$ r = M(d), $$

which is then processed to produce an outcome

$$ o = T(d, r), $$

which is then evaluated as

$$ e = v(o), $$

the essential variable that the system aims to achieve, induced by the valuation mapping v : O → E.

If R does nothing, i.e. keeps to one value, then the variety in D threatens to go through O to E, contrary to what is wanted. It may happen that O, without change by R, will block some of the variety and occasionally this blocking may give sufficient constancy at E. More commonly, a further suppression at E is necessary; it can be achieved only by further variety at R.
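The chain from disturbance to essential variable can be sketched in a few lines of Python. Everything concrete here is an assumption made for illustration: the 3x3 table entries, the rule that only outcome "a" is good, and the helper name `regulate`. The sketch contrasts a regulator that keeps to one response with one whose law of action M matches responses to disturbances.

```python
# Hypothetical 3x3 Table of Outcomes T : D x R -> O (illustrative values only).
D = ["d1", "d2", "d3"]
R = ["r1", "r2", "r3"]
T = {
    ("d1", "r1"): "a", ("d1", "r2"): "b", ("d1", "r3"): "c",
    ("d2", "r1"): "b", ("d2", "r2"): "c", ("d2", "r3"): "a",
    ("d3", "r1"): "c", ("d3", "r2"): "a", ("d3", "r3"): "b",
}

def v(o):
    """Valuation v : O -> E; in this sketch only outcome 'a' counts as good."""
    return "good" if o == "a" else "bad"

def regulate(M, d):
    """Apply the law of action M, then the table T, then the valuation v."""
    r = M[d]
    o = T[(d, r)]
    return v(o)

constant = {d: "r1" for d in D}                   # R keeps to one value
matched = {"d1": "r1", "d2": "r3", "d3": "r2"}    # M maps each d to a working r

constant_E = {regulate(constant, d) for d in D}
matched_E = {regulate(matched, d) for d in D}
print(constant_E, matched_E)
```

With the constant response, the variety in D passes through to E (both "good" and "bad" occur); with the matched mapping, E is held at a single value.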

Under idealized assumptions, Ashby showed that the smallest variety that can be achieved in the set of actual outcomes cannot be less than the quotient of the number of rows divided by the number of columns:

$$ V(E) \geq \frac{V(D)}{V(R)} $$

Where:

- V(D) = variety in the disturbances

- V(R) = variety in the regulatory responses

- V(E) = variety in the outcome (essential variables)

This inequality shows that the variety in the outcomes cannot be reduced below the quotient of the variety in the disturbances and the variety in the regulatory responses.

Perfect regulation here means deterministic essential outcome, not merely acceptable performance. For perfect regulation, we need V(E) to be stable or close to 1 (only one possible outcome):

$$ V(E) = 1 \quad \text{which requires} \quad V(R) \geq V(D) $$

The law reflects a fundamental insight: control is about the ratio of disturbances to regulatory responses, not just their arithmetic difference.
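The quotient bound can be checked by brute force on a small table. The sketch below is an illustration under stated assumptions: the table T(d, r) = (d + r) mod 6 is hypothetical, chosen so that no outcome repeats within a column (one of Ashby's idealized conditions), and it enumerates every deterministic law of action M : D → R.

```python
import math
from itertools import product

n_d, n_r = 6, 3          # V(D) = 6 disturbances, V(R) = 3 responses
D = range(n_d)
R = range(n_r)

def T(d, r):
    """Hypothetical table of outcomes; entries within each column are distinct."""
    return (d + r) % n_d

# Try every deterministic policy (one response per disturbance) and record
# the smallest number of distinct outcomes any of them can achieve.
min_variety = min(
    len({T(d, r) for d, r in zip(D, policy)})
    for policy in product(R, repeat=n_d)
)
bound = math.ceil(n_d / n_r)   # V(D) / V(R), rounded up to a whole count

print(min_variety, bound)
```

In this example no policy brings the outcome variety below V(D)/V(R) = 2, and at least one policy attains it.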

Information-Theoretic Formulation

All acts of regulation can be related to the concepts of communication theory by noticing that the “disturbances” correspond to noise, and the “goal” is a message of zero entropy, because the target value E is constant. Thus, the Law of Requisite Variety says that R's capacity as a regulator cannot exceed R's capacity as a channel of communication.

The variety is measured by the logarithm of its value. If the logarithm is taken to base 2, the unit is the bit of information. In practice, we use the Shannon information entropy, denoted by H. For a quantifiable variable, entropy is just another measure of variance.

Applying this to Ashby's Law we get:

$$ H(E) \geq H(D) - H(R) $$

If we assume equiprobable disturbances/responses and treat variety as cardinality, then H reduces to log2 V, yielding:

$$ \log_2 V(E) \geq \log_2 V(D) - \log_2 V(R) $$

Ashby's law can thus be reformulated clearly:

The information-processing capacity (entropy) of a control system must be at least as large as the information (entropy) in the system it regulates.

Ashby's Law can be interpreted as a cybernetic analogue of Shannon's "Noisy channel coding theorem" which states that communication through a channel that is corrupted by noise may be restored by adding a correction channel with a capacity equal to or larger than the noise corrupting that channel. The disturbance D, which threatens to get through to the outcome E, clearly corresponds to the noise; and the correction channel is the system R, which is supposed to restore the outcome E[8].

It has been shown that the law of requisite variety can be extended to include knowledge or ignorance by simply adding the conditional uncertainty term H(R|D)[31]. When buffering is present, part of the environmental variety is absorbed passively before reaching the regulator. This reduces the effective disturbance entropy by an amount K, which is the buffering capacity:

$$ H(E) \geq H(D) + H(R \mid D) - H(R) - K $$

Where:

- H(E) is the residual variety i.e. the realized essential-variable distribution, not the size of the set E

- H(R) is the entropy of the regulator, representing its information-processing capacity.

- H(D) is the entropy of the disturbances, representing the complexity of the environment.

- H(R|D) is the conditional entropy of the regulator given disturbances, representing the lack of requisite knowledge, i.e. the ignorance of the regulator about how to react correctly to each appearance of a disturbance D. Only a regulator that knows how to use the available regulatory variety H(R) to react correctly to each disturbance D will reach the optimal result of regulation.

- K is the buffering capacity measured in bits of disturbance variety absorbed before reaching the regulator. Buffering is the passive absorption or damping of disturbances i.e. the amount of noise that a system can absorb without requiring an active regulatory response.

A necessary but not sufficient condition for effective control is: H(R) + K ≥ H(D). Sufficiency additionally requires H(R|D) = 0.

Successful (essential) outcomes E do not depend solely on the variety of responses H(R) available to a regulator R; the system must also know which response to select for a given disturbance. Effective compensation of disturbances requires that the system be able to map each disturbance to an appropriate response from its repertoire. The absence or incompleteness of such knowledge can be quantified using the conditional entropy H(R|D)[31]. In other words, H(R|D) measures how much the regulator R lacks the requisite knowledge to match responses to disturbances. Without such requisite knowledge, the system would have to try out responses one after another until every disturbance has been eliminated. Thus, merely increasing the response variety H(R) is not sufficient; it must be complemented by a corresponding increase in selectivity, that is, a reduction in H(R|D), i.e. an increase in knowledge. H(R|D) = 0 represents the case of no uncertainty or complete knowledge, where the action is completely determined by the disturbance. This requirement may be called the law of requisite knowledge[29].

H(R|D) reminds us that response alone is not sufficient: if the regulator does not know which response is appropriate for the given disturbance, it can only try out regulatory actions at random, in the hope that one of them will be effective and that none of them would make the situation worse. The larger the H(R|D), the larger the probability that the regulator would choose a wrong regulatory response, and thus fail to reduce the variety in the outcomes H(E). Therefore, this term H(R|D) has a “+” sign in the inequality: more uncertainty (less knowledge) produces more variation in the essential variables E[54].

To achieve control, the regulator R must possess sufficient information-processing capacity (entropy) such that the following is achieved:

$$ H(R) \geq H(D) $$

In other words, the complexity of the environment D can not exceed that of the system R, which means the system R fully matches the environment D[7].

Since I(R:D) = H(R) - H(R|D), the law simplifies to:

$$ H(E) \geq H(D) - I(R:D) - K $$

The mutual information I(R:D) represents the requisite knowledge of the regulator R about how to react correctly to each disturbance D, i.e. the amount of regulatory variety that is effectively correlated with and therefore absorbs the variety in the disturbances. Such knowledge may be realized structurally as the regulator's learned law of action M represented by a mapping M: D → R , by which disturbances are mapped to regulatory responses[54]. The mutual information I(R:D) quantifies how much of the regulator's learned law of action M: D → R effectively couples disturbances to responses, while the remaining uncertainty H(R|D) quantifies the lack of requisite knowledge[29].
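To make the roles of H(R), H(R|D) and I(R:D) concrete, the following Python sketch computes them from a joint distribution P(D, R) and evaluates the lower bound H(D) + H(R|D) - H(R) - K on the residual variety. The two joint distributions and the function names are hypothetical illustrations: one regulator whose response is fully determined by the disturbance, and one that picks responses at random.

```python
import math
from collections import defaultdict

def entropy(p):
    """Shannon entropy in bits of a distribution given as {event: probability}."""
    return -sum(q * math.log2(q) for q in p.values() if q > 0)

def marginal(joint, axis):
    """Marginal of a joint {(d, r): probability} over coordinate `axis`."""
    m = defaultdict(float)
    for key, q in joint.items():
        m[key[axis]] += q
    return dict(m)

def requisite_terms(joint, K=0.0):
    """Entropy terms of the extended law for a joint P(D, R) and buffering K."""
    H_D = entropy(marginal(joint, 0))
    H_R = entropy(marginal(joint, 1))
    H_R_given_D = entropy(joint) - H_D     # chain rule: H(D,R) = H(D) + H(R|D)
    I_RD = H_R - H_R_given_D               # requisite knowledge I(R:D)
    bound = max(0.0, H_D - I_RD - K)       # entropy cannot be negative
    return {"H_D": H_D, "H_R": H_R, "H_R_given_D": H_R_given_D,
            "I_RD": I_RD, "bound_on_H_E": bound}

# Regulator that knows: each of 4 equiprobable disturbances has its own response.
knows = {(d, d): 0.25 for d in range(4)}
# Regulator that guesses: response chosen uniformly, independent of D.
guesses = {(d, r): 1 / 16 for d in range(4) for r in range(4)}

print(requisite_terms(knows))
print(requisite_terms(guesses))
```

Both regulators have the same response variety H(R) = 2 bits, yet only the first has I(R:D) = 2 bits; for the second, the bound on H(E) stays at the full 2 bits of disturbance entropy.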

3. Core challenges in applying Ashby's Law to real systems

We conducted a literature review aimed at identifying the primary challenges and limitations associated with applying Ashby's Law in real-world systems.

A central challenge that emerges is the measurement of variety. In most of the reviewed literature, the concept of variety is either poorly defined or not explicitly measured, resulting in ambiguity and potential misinterpretation of the law's implications. Key obstacles to effective measurement include:

- The direct measurement of variety is fundamentally incomputable for all but the simplest systems [14].

- Hidden variables introduce uncertainty and complicate measurement efforts [15].

- Trade-offs often arise between variety at different scales [16].

- A combinatorial explosion occurs when attempting to enumerate all possible system states [15,16].

- Resource limitations constrain the feasibility of comprehensive measurement [20].

- Environmental complexity is frequently “unknowable,” preventing complete assessment [25].

- Most studies lack explicit or standardized methods for quantifying variety [14,17-20,25,27].

- Existing approaches often lack rigorous quantitative validation [17].

Several measurement methods have been proposed, including:

- Markup language-based variety estimation [18],

- Iterative sampling techniques [21],

- Entropy and determinism metrics to evaluate communication complexity, where greater variety was correlated with improved effectiveness [22],

- Social network and cluster analysis to assess resilience [23], and

- Multiple Correspondence Analysis (MCA) for capturing organizational complexity [24].

In addition, a subset of studies estimate variety through observed performance rather than structural attributes. Notable examples include:

- Communication-based performance measures, employing determinism metrics to evaluate repeatable patterns in team behavior [22];

- Team performance assessments, using task-based surveys to evaluate an organization's risk-handling capabilities [23];

- Leadership behavior analysis, based on actual behavioral responses to simulated scenarios [26]; and

- Relative performance comparisons, assessing organizational effectiveness across contexts using perception-based rather than absolute metrics [14].

While these performance-based approaches provide practical insights, they often rely on subjective or indirect indicators of variety, which may introduce biases and limit their generalizability. For example, performance outcomes may fail to account for hidden variables or the underlying complexity of the system [15]. Moreover, these approaches remain underrepresented in the literature, where structural and theoretical analyses still dominate.

In summary, although numerous methods for measuring variety have been proposed, no single comprehensive or universally accepted solution has emerged. Quantification remains a persistent challenge in the application of Ashby's Law to complex real-world systems.

Solution

These challenges significantly hinder the practical application of Ashby's Law. Whether considering a human, an AI model, or an organization, we are typically limited to observing external behavior rather than internal mechanisms—unless we are able to "open the box."

Ashby himself emphasized that all real systems can be considered black boxes. He argued that while black boxes mimic the behavior of real objects, in practice, real objects are black boxes: we have always interacted with systems whose internal workings are, to some extent, unknown.

This leads to what Ashby termed the black box identification approach [2], which involves:

- Perturbing the system by applying external disturbances,

- Measuring the system's responses to these perturbations, and

- Inferring the internal variety or capacity from the observed input-outcome relationships.

In most practical scenarios, we are only able to observe the outcomes of a system. These observable outcomes can be used to infer bounds on the system's internal variety—specifically, the extent of variety it must possess or lack in order to exhibit the observed behavior.

We propose such an approach: to treat the system as a black box, observe the probability of successful outcomes to disturbances, and estimate the gap in its internal variety based on that. Let E denote the event that the system gives a correct response to disturbance D, and let R be the regulator's action. In information-theoretic terms, perfect regulation requires H(R|D) = 0[31]. Using our novel information-theoretic estimator, empirical estimates of P(E=1) are used to quantify H(R|D) in bits of information. This quantifies how much disorder the regulator still permits and, conversely, how close the system comes to meeting Ashby's requisite-variety condition.

4. Knowledge Discovery Process

The process of selection may be either more or less spread out in time. In particular, it may take place in discrete stages. What is fundamental quantitatively is that the overall selection achieved cannot be more than the sum (if measured logarithmically) of the separate selections. (Selection is measured by the fall in variety.) 13/17[2]

Ashby's selection in design and regulation via requisite variety are structurally identical: they describe how constraints (or regulation) reduce the variety of possible outcomes from an initial space. In Ashby's account, constraints, tests, feedback, rules, and observations are all selection mechanisms that reduce variety.

Ashby (13/15 [2]) measures the quantity of selection in bits as:

$$ S = \log_2 V_{before} - \log_2 V_{after} = \log_2 \frac{V_{before}}{V_{after}} $$

Where

- S is the selection (the amount by which the variety is reduced) or the information gained, i.e., how much the uncertainty has been reduced,

- Vbefore is the variety before the selection, i.e. before a constraint (filter, decision, control action) is applied, and

- Vafter is the variety after the selection, i.e. after the constraint is applied.

From here on, we treat "variety in bits" as Shannon entropy i.e., using the distribution over possibilities. If alternatives are equiprobable, this reduces to Ashby's counting form.

Thus every time we introduce a rule or a constraint we throw away some of the possibilities and gain information equal to the logarithm of that reduction:

- “What fraction of possibilities remains?” That is Vafter / Vbefore.

- “How many bits of information does this represent?” That is S = log2(Vbefore / Vafter).

Rather than a single act, selection is often a multi-stage process of selection from a range of possibilities[2][4]. It is a process of progressively reducing uncertainty at each stage, where each stage of selection narrows possibilities and increases order (reduces variety). At each stage, the system selects the most appropriate response based on the current state of its knowledge and the disturbances it faces. Mathematically we have:

$$ S_i = \log_2 \frac{V_{i-1}}{V_i}, \qquad i = 1, \dots, k, $$

where V_0 is the variety before any selection and V_i is the variety remaining after stage i.

The total selection is the sum of the partial selections because logarithms turn multiplications of ratios into additions:

$$ S_{total} = \sum_{i=1}^{k} S_i = \log_2 \frac{V_0}{V_k} $$

Note that reductions add only for nested/refining partitions (each stage refines the previous stage's partition of possibilities). If two stages constrain the same dimension in overlapping ways, we must count the second stage's reduction relative to the first, not from the original variety.
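A short numeric sketch of nested selection in Python follows. The variety values 64, 16, 4, 1 are made up for illustration, and `selection_bits` is our own helper name; each stage refines the previous one, so the per-stage bits add up to the total.

```python
import math

def selection_bits(v_before, v_after):
    """Selection in bits: the fall in variety, log2(V_before / V_after)."""
    return math.log2(v_before / v_after)

# Hypothetical nested stages: 64 possibilities narrowed to 16, then 4, then 1.
varieties = [64, 16, 4, 1]

stages = [selection_bits(a, b) for a, b in zip(varieties, varieties[1:])]
total = selection_bits(varieties[0], varieties[-1])

print(stages, sum(stages), total)
```

Each stage here removes 2 bits, and the three stages together remove the 6 bits needed to single out one of 64 alternatives.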

We refer to the multi-stage process of narrowing down and selecting the appropriate response from its set of alternative responses as a Knowledge Discovery Process.

We can say that we've got "it from bit" - a phrase coined by John Wheeler. "It from bit" symbolizes the idea that every item in the physical world has knowledge as an immaterial source and explanation at its core[6].

So far we have used Ashby's notation for disturbances D and regulatory responses R. From this point on, disturbances are denoted by Y and responses by X. The table below aligns Ashby's language of regulation with Shannon's information-theoretic quantities by showing that both describe the same process: the progressive reduction of uncertainty about which action will succeed.

| Ashby term | Symbol | Shannon / information-theoretic term | Symbol |
|---|---|---|---|
| Disturbance (observed at decision time) | D | Conditioning variable (given side-information) | Y |
| Correct regulatory response to be selected | R | Random variable to be identified / selected | X |
| Initial lack of requisite knowledge of the regulator about which response works for a given disturbance | H(R \| D) | Initial conditional entropy of response given disturbance | H(X \| Y) |
| Selection signals (tests, observations, feedback, constraints, rules, partial executions) | Z1, Z2, …, Zk | Auxiliary information sources that reduce uncertainty about which response X is correct (works), given Y | Z1, Z2, …, Zk |
| Residual variety after the i-th selection stage | V(R \| D, Z1, …, Zi) | Conditional entropy remaining after i stages | H(X \| Y, Z1, …, Zi) |
| Selection achieved at stage i (reduction in variety due to one constraint) | log2(Vbefore / Vafter,i) | Conditional mutual information gained at stage i | I(X ; Zi \| Y, Z<i) |
| Residual variety after k selection stages | V(R \| D, Z1, …, Zk) | Final conditional entropy after all selection signals | H(X \| Y, Z1, …, Zk) |
| Total selection achieved (successful adaptation) | log2(Vbefore / Vafter) | Total mutual information acquired through all selection stages | I(X ; Z1, …, Zk \| Y) |

Let Mt be the system's internal stored structural mapping (coupling) at the start of stage t. In Ashby's terms, M is not a separate object but the regulator's law of action, i.e. the learned functional relation by which disturbances are mapped to correct responses[54]. In contrast, the Table of Outcomes (T) is a fixed structured space of possible outcomes: it specifies what outcome would result from each disturbance-response pair (d, r).

At stage t, the stored structural coupling (mapping) Mt is treated as a parameter (not a random variable). Therefore we do not write entropies or mutual informations “conditioned on Mt”. Instead, we write them as quantities induced by the mapping:

$$ H_t(X \mid Y) := H_{M_t}(X \mid Y), \qquad I_t(X;Y) := I_{M_t}(X;Y) $$

When we need a quantity that also depends on within-stage evidence Z, we use the same convention, e.g. It(X;Z|Y) means IMt(X;Z|Y).

A disturbance Y is observed first and is treated as given; the uncertainty lies in selecting the correct response X. This initial uncertainty is the lack of requisite knowledge, measured as the initial conditional entropy H(X|Y). The system then applies a sequence of constraints, tests, feedback signals, or rules (Z₁…Zₖ), each of which removes some possibilities and therefore reduces the lack of requisite knowledge. In Ashby's terms this is “selection”; in Shannon's terms each stage i contributes conditional mutual information I(X ; Zi | Y, Z<i).

Multi-stage selection

We model each stage outcome as S₁, S₂, …, Sₖ. When mapping one stage of selection to one selection signal Zi we have:

$$ S_i = I(X; Z_i \mid Y, Z_{<i}) $$

Staged selection is additive only in incremental terms. If stages share information or constrain overlapping parts of X, summing their marginal 'reductions' overcounts. The per-stage contributions must therefore be conditional (incremental) to avoid double counting. The correct staged accounting is

$$ I(X; Z_i \mid Y, Z_{<i}) = H(X \mid Y, Z_{<i}) - H(X \mid Y, Z_{\le i}), $$

i.e., each stage is credited only for reducing the residual uncertainty left by earlier stages.

As selections accumulate, the remaining uncertainty H(X | Y, Z₁…Zᵢ) shrinks. Successful adaptation occurs when this uncertainty collapses to zero, meaning the system now knows exactly which response works for the given disturbance. The total amount of selection Ashby describes is mathematically identical to the total mutual information accumulated across stages.

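Shannon's chain rule for mutual information is:

$$ I(X; Z_1, \dots, Z_k \mid Y) = \sum_{i=1}^{k} I(X; Z_i \mid Y, Z_{<i}) $$

After k selections we have:

$$ H(X \mid Y, Z_1, \dots, Z_k) = H(X \mid Y) - \sum_{i=1}^{k} I(X; Z_i \mid Y, Z_{<i}) $$

Given the success criterion of determining the correct response X for a given disturbance Y, Ashby's Law of Requisite Variety for a staged selection process is:

$$ \sum_{i=1}^{k} I(X; Z_i \mid Y, Z_{<i}) \geq H(X \mid Y) $$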

Or equivalently, the sum of the bits removed by selection by every stage must at least equal the bits of uncertainty injected by the original range of possibilities or by disturbances.
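The staged accounting can be checked numerically. The sketch below is an illustration under stated assumptions: the disturbance Y is held fixed (so conditioning on Y is left implicit), X is uniform over four responses, and the two signal constructions are hypothetical. In the refining case Z1 and Z2 reveal different halves of X; in the overlapping case Z2 merely repeats Z1.

```python
import math
from collections import defaultdict

def H(joint, keep):
    """Entropy in bits of the marginal over the coordinate indices in `keep`."""
    m = defaultdict(float)
    for outcome, p in joint.items():
        m[tuple(outcome[i] for i in keep)] += p
    return -sum(p * math.log2(p) for p in m.values() if p > 0)

def cond_H(joint, target, given):
    """Conditional entropy H(target | given) = H(target, given) - H(given)."""
    return H(joint, target + given) - H(joint, given)

def cond_I(joint, a, b, given):
    """Conditional mutual information I(a ; b | given)."""
    return cond_H(joint, a, given) - cond_H(joint, a, b + given)

X, Z1, Z2 = [0], [1], [2]   # coordinate indices within each outcome tuple

# Refining signals: Z1 is the high bit of X, Z2 is the low bit.
refining = {(x, x >> 1, x & 1): 0.25 for x in range(4)}
# Overlapping signals: Z2 repeats Z1 and adds nothing new.
overlapping = {(x, x >> 1, x >> 1): 0.25 for x in range(4)}

for name, joint in [("refining", refining), ("overlapping", overlapping)]:
    incremental = cond_I(joint, X, Z1, []) + cond_I(joint, X, Z2, Z1)
    naive = cond_I(joint, X, Z1, []) + cond_I(joint, X, Z2, [])
    residual = cond_H(joint, X, Z1 + Z2)
    print(name, incremental, naive, residual)
```

In the refining case the incremental sum equals H(X) = 2 bits and the residual uncertainty is zero. In the overlapping case the naive sum of marginal reductions still reports 2 bits, while the incremental sum is only 1 bit and 1 bit of uncertainty remains.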

If there can be multiple acceptable responses for a given disturbance, then the “right object” is not “entropy of X”, but entropy of success i.e. of essential variables E. Thus, we would replace X with an equivalence class of actions or replace the criterion with the entropy of success H(E|Y,Z)=0.

Regulation

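Regulation uses staged information to eliminate uncertainty within a stage:

$$ H_t(X \mid Y, Z_{t,1}, \dots, Z_{t,k}) = H_t(X \mid Y) - \sum_{i=1}^{k} I_t(X; Z_{t,i} \mid Y, Z_{t,<i}), $$

where k is the number of selection signals within a stage.

During the stage, staged selection supplies bits of selection until the choice is effectively determined:

$$ H_t(X \mid Y, Z_{t,1}, \dots, Z_{t,k}) \approx 0 $$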

Learning (across stages)

Regulation can use within-stage evidence Z to determine the correct response X for a given disturbance Y during the stage. Learning makes that success persistent by updating the stored structural coupling (mapping) M so future stages start with less uncertainty.

We treat Mt as the system's stored mapping at stage t (a parameter, not a random variable). To avoid conditioning on a function, we write entropies and mutual informations as induced by Mt:

$$ H_t(X \mid Y) := H_{M_t}(X \mid Y), \qquad I_t(X;Y) := I_{M_t}(X;Y) $$

Learning Axiom (Structural Knowledge Accumulation). A system is said to learn (in the structural-coupling sense) if and only if the within-stage evidence stream Zt = (Zt,1, …, Zt,kt) is incorporated into an updated mapping Mt+1 = Update(Mt, Zt), such that for subsequent encounters with the same class of disturbances Y, the induced lack of requisite knowledge decreases:

$$ H_{t+1}(X \mid Y) < H_t(X \mid Y) $$

In words: tomorrow's “prior” uncertainty is smaller because the mapping was updated. (Note: this does not in general imply that I(X;Y) must increase unless additional assumptions are made about the marginal distribution of X; we keep the learning definition anchored to the decrease in Ht(X|Y).)
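As a hedged illustration of the learning condition, the Python sketch below represents the mapping as a policy P(X|Y) and compares the induced conditional entropy before and after an update. The two disturbances, the four candidate responses, and the particular update (the response to y1 becomes fully determined) are all hypothetical.

```python
import math

def conditional_entropy(p_y, policy):
    """H(X|Y) in bits for a disturbance distribution p_y and a policy P(X|Y)."""
    total = 0.0
    for y, py in p_y.items():
        h = -sum(q * math.log2(q) for q in policy[y].values() if q > 0)
        total += py * h
    return total

p_y = {"y1": 0.5, "y2": 0.5}   # assumed distribution of disturbances

# Stage t: no stored knowledge, all four responses equally likely for each y.
M_t = {y: {x: 0.25 for x in "abcd"} for y in p_y}
# Stage t+1: the update has stored that response "a" works for y1.
M_next = dict(M_t, y1={"a": 1.0})

H_t = conditional_entropy(p_y, M_t)
H_next = conditional_entropy(p_y, M_next)
print(H_t, H_next)
```

The induced lack of requisite knowledge falls from 2 bits to 1 bit, so under this sketch the update counts as learning; a further update that also fixes the response to y2 would bring it to zero.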

Posterior-becomes-prior (one-step structuralization). A strong form of learning is when the next stage starts with what the system would otherwise need to discover via the same evidence:

$$ H_{t+1}(X \mid Y) = H_t(X \mid Y, Z_t) $$

That is: learning stores within-stage discoveries so that future stages require fewer bits of selection to reach successful regulation.

Corollary (Complete Adaptation). Learning is complete (for the task class) when the mapping makes the correct response effectively determined from the disturbance: Ht(X|Y) ≈ 0. (If multiple responses are acceptable, replace X with the equivalence class of acceptable actions or use the goal variable.)

So: regulation uses realized evidence Z to drive uncertainty down within a stage (e.g., it is H(X|Y, Z=z) that collapses after observing a particular z), while learning stores the effect of that evidence by updating M so future stages start with lower Ht(X|Y).

Rework as negative contribution to accumulated coupling (negative learning)

Sometimes what was stored in the mapping later proves incorrect (e.g., failing test, production defect, requirement change), forcing a revision of the mapping: Mt+1 = Revise(Mt, Et). We do not model memory mechanics (what is overwritten, versioned, or kept). We only track the net effect of the mapping change on the system's induced uncertainty/coupling measures.

Coupling and uncertainty measures (induced by the mapping). Let:

$$ K_t := I_t(X;Y), \qquad U_t := H_t(X \mid Y) $$

Here, Kt is “stored requisite coupling” and Ut is “lack of requisite knowledge” (remaining uncertainty about which response works given the disturbance), both evaluated under the mapping Mt.

Exact knowledge ledger

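Define the net change in stored coupling as the actual difference:

$$\Delta_t K = K_{t+1} - K_t$$

Then define gross learned bits and retracted (rework) bits as the positive and negative parts of that change:

$$G_t = \max(\Delta_t K, 0), \qquad L_t = \max(-\Delta_t K, 0)$$

Equivalently (as difference measures on the mapping update):

$$G_t = \left[I_{t+1}(X;Y) - I_t(X;Y)\right]^{+}, \qquad L_t = \left[I_t(X;Y) - I_{t+1}(X;Y)\right]^{+}$$

With these definitions, the additive ledger is exact (a tautology, not an assumption):

$$K_{t+1} = K_t + G_t - L_t$$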

Therefore:

- If Gt > 0, the mapping update yields net learning (stored coupling increases).

- If Lt > 0, the mapping update yields net rework (stored coupling decreases).

- Mixed stages are allowed: the net is captured by ΔtK.
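The ledger can be sketched in a few lines of Python. This is a minimal illustration, assuming the coupling values Kt are already available as numbers in bits; the function name `knowledge_ledger` and the sample series are hypothetical and not taken from the paper.

```python
def knowledge_ledger(k_values):
    """Split each change in stored coupling K_t into learned and retracted bits."""
    rows = []
    for k_now, k_next in zip(k_values, k_values[1:]):
        delta = k_next - k_now            # net change in stored coupling
        learned = max(0.0, delta)         # G_t: positive part of the change
        retracted = max(0.0, -delta)      # L_t: negative part (rework)
        rows.append((delta, learned, retracted))
    return rows


# Hypothetical coupling values K_t in bits (illustration only).
k_series = [2.0, 3.5, 3.0, 3.0]
ledger = knowledge_ledger(k_series)

for t, (delta, learned, retracted) in enumerate(ledger):
    # The additive ledger K_{t+1} = K_t + G_t - L_t holds by construction.
    assert k_series[t] + learned - retracted == k_series[t + 1]
    print(f"t={t}: delta={delta:+.1f} learned={learned:.1f} retracted={retracted:.1f}")
```

In this sample the second step is a rework stage (Lt > 0) and the third is neutral, which is the mixed behaviour the list above allows.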

Uncertainty ledger (parallel view)

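We can track “lack of requisite knowledge” directly as Ut = Ht(X|Y). Define ΔtU = Ut+1 - Ut, and split it into its positive/negative parts:

$$\Delta_t U = (\Delta_t U)^{+} - (\Delta_t U)^{-}, \qquad (\Delta_t U)^{+} = \max(\Delta_t U, 0), \qquad (\Delta_t U)^{-} = \max(-\Delta_t U, 0)$$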

This view is always valid. If we want to link it to the coupling ledger via Ut = Ht(X) - Kt, we must be explicit about whether and how the marginal Ht(X) changes as the mapping changes. Absent constraints on the marginal Ht(X), the coupling and uncertainty ledgers are complementary but not interchangeable.

Rework criterion. Rework at stage t is simply: Lt > 0 (equivalently Kt+1 < Kt ).

Rework is measured directly as the drop in the coupling measure induced by the mapping change Mt → Mt+1.

Link to linear information flow, structural coupling (mapping) impact

The evidence stream is linear (stages), but the object edited is the entire structural coupling (mapping) M. The per-stage chain rule quantifies how many bits the stage could contribute via staged selection, while the retraction term quantifies how many previously stored bits must be removed due to replacement. Rework is not a separate phenomenon; it is the negative part of the same additive knowledge accounting.

Residual Variety

In the context of Ashby's Law of Requisite Variety, residual variety refers to the variety that the regulator fails to absorb. In other words, the remaining uncertainty or uncontrolled states in a system after a regulator has applied its available counter-actions. Information-theoretically, this can be understood in two ways:

- The residual variety is the uncertainty H(X|Y) about the regulator's response given the disturbance, i.e., the regulator's remaining uncertainty about what action to take given a known disturbance D. It focuses on the input side: how much the regulator does not know about responding to the disturbance that is hitting the system.

- The residual variety is the uncertainty H(E) in essential variables, or operationally H(B) when outcomes are binarized into success/failure. It focuses on the outcome side: how much uncertainty persists in what we care about, the essential variable.

Both interpretations quantify how much uncertainty is left once the regulator has made its move, because H(X|Y) upper-bounds the achievable reduction in H(E) given the fixed table T. If the regulator perfectly counters every disturbance (full requisite variety), the residual variety in both forms would be zero.

Observability of Residual Variety

Now we focus on one important consideration: the values of E (the essential variable) are observable. These are the outcomes we can measure and care about: system performance, outcome quality, stability measures, etc. Because they are observable, we can empirically estimate H(E) by collecting data on how E varies given different regulator states R. This makes H(E) (residual variety) a measurable quantity in practice.

H(X|Y) presents observability challenges, as disturbances D may not be directly observable: they could be internal system dynamics, environmental factors we cannot measure, or complex interactions we cannot decompose. Even if some disturbances are observable, the full set D might include hidden or latent factors that we cannot directly measure or quantify. This makes H(X|Y) potentially unobservable or only partially estimable, and often theoretical or abstract.

This creates an important asymmetry in cybernetic analysis:

- H(E) is observable and measurable via H(B), allowing us to empirically assess how well the regulator is performing in controlling outcomes.

- H(X|Y) may not be fully observable, making it difficult to quantify the regulator's knowledge of disturbances it's regulating.

This is why H(E) is often the more practically useful measure - it tells us what we can observe about system performance. That is reflected in the literature as well, where work focuses on observable outcomes rather than unobservable disturbances[29,30,35,36,37,38,39,40,41,42,43,44,45].

Defining Knowledge to be Discovered

Ashby's Law of Requisite Variety concerns capacity: the regulator must have a sufficiently large repertoire of possible responses[1]. Heylighen's Law of Requisite Knowledge adds the missing condition for effective regulation: it is not enough to have many possible actions; the regulator must know which action to select for the given disturbance. Otherwise, increased action variety increases the chance of choosing the wrong action, forcing trial-and-error selection[29].

In information-theoretic terms, this “knowing which action to select” is exactly the reduction of uncertainty about the response X once the disturbance Y is observed. That uncertainty is the conditional entropy H(X|Y).

Knowledge To Be Discovered. We define Knowledge To Be Discovered as the conditional entropy H(X|Y): the expected uncertainty about which response(s) will achieve success, given that the disturbance is known. Operationally, it is the amount of selection (in bits) that must still be supplied in order to determine a successful response.

Thus, Knowledge To Be Discovered is the precise difference between the action variety available and the coupling/selectivity actually achieved:

$$H(X \mid Y) = H(X) - I(X;Y)$$

Here:

- H(X) is the regulator's available response variety (the size/diversity of the action repertoire), or the prior knowledge.

- I(X;Y) is the regulator's requisite knowledge: how much the disturbance actually informs the choice of action, or the required knowledge.

- H(X|Y) is the remaining uncertainty after the disturbance is known, i.e. the lack of requisite knowledge, or the knowledge to be discovered.
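The three quantities can be checked numerically on a small joint distribution. The sketch below is illustrative only: the joint table and the helper names `entropy` and `knowledge_measures` are assumptions, not part of the paper. Mutual information is computed from its own definition so that the relation H(X|Y) = H(X) - I(X;Y) is verified rather than assumed.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def knowledge_measures(joint):
    """joint[y][x] = P(Y=y, X=x). Returns (H(X), H(X|Y), I(X;Y)) in bits."""
    p_y = [sum(row) for row in joint]
    p_x = [sum(col) for col in zip(*joint)]
    h_x = entropy(p_x)
    # H(X|Y): entropy of each conditional row, weighted by P(Y=y).
    h_x_given_y = sum(
        py * entropy([p / py for p in row]) for py, row in zip(p_y, joint) if py > 0
    )
    # I(X;Y) from its definition, independent of the two values above.
    mutual_info = sum(
        p * math.log2(p / (p_x[j] * p_y[i]))
        for i, row in enumerate(joint)
        for j, p in enumerate(row)
        if p > 0
    )
    return h_x, h_x_given_y, mutual_info


# Hypothetical joint distribution over two disturbances and three responses.
joint = [
    [0.50, 0.00, 0.00],  # y1: the response is fully determined
    [0.00, 0.25, 0.25],  # y2: two candidate responses remain
]
h_x, h_x_given_y, mutual_info = knowledge_measures(joint)
print(h_x, h_x_given_y, mutual_info)
```

Here half of the disturbances are already fully mapped, so only the y2 cases contribute knowledge to be discovered.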

Requisite knowledge is not an independent quantity: it is the total selectivity actually available at decision time, which is what makes H(X|Y) small. That selectivity can come from stored structure M or from online evidence Z:

- Offline (stored) coupling: learning updates the mapping M so that future stages start with higher It(X;Y) and therefore lower Ht(X|Y).

- Online (within-stage) selection: evidence Z (tests, observations, constraints) supplies additional conditional information I(X;Z|Y), driving H(X|Y,Z) toward 0 during regulation.
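The two routes can be related through the chain rule for conditional entropy. The identity is standard; the numeric instance that follows it is a hypothetical illustration, not a result from the paper.

```latex
% Chain rule: within-stage evidence Z reduces what the stored mapping left open.
H(X \mid Y, Z) = H(X \mid Y) - I(X; Z \mid Y)

% Hypothetical instance: the mapping leaves 3 bits open and three noiseless
% binary tests each supply 1 bit, so nothing remains to be discovered.
H(X \mid Y, Z_1, Z_2, Z_3) = 3 - (1 + 1 + 1) = 0 \ \text{bits}
```

Learning shrinks the first term on the right-hand side for future stages, while regulation grows the second term within the current stage.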

In the ideal case of complete adaptation for the task class, the regulator's selectivity is perfect: H(X|Y) = 0 (the response is effectively determined by the disturbance). If multiple responses are acceptable, we will replace X with the equivalence class of acceptable actions or measure success directly via the goal variable.

This yields the intended conceptual pairing:

- Residual variety H(E): what remains uncontrolled in outcomes.

- Knowledge To Be Discovered H(X|Y): what remains undetermined about which action succeeds, given the disturbance.

This framing suggests that reducing "knowledge to be discovered" is a pathway to reducing "residual variety" - which is exactly the cybernetic insight that better information about disturbances enables better control. It emphasizes the constructive, learning-oriented aspect of cybernetic systems rather than just their current limitations.

5. Quantifying Knowledge To Be Discovered

How is the desired regulator to be brought into being? With whatever variety the components were initially available, and with whatever variety the designs (i.e. input values) might have varied from the final appropriate form, the maker Q acted in relation to the goal so as to achieve it. He therefore acted as a regulator. Thus the making of a machine of desired properties (in the sense of getting it rather than one with undesired properties) is an act of regulation[2].

We estimate the knowledge to be discovered operationally from externally observable execution counts — anchored capacity N, outcomes O and their corresponding values E, and (optionally) wasted commitments W, without observing disturbances Y or the internal selection process directly. We focus on the case where regulation is eventually successful, i.e. the knowledge to be discovered eventually becomes 0.

Clarifying “outcomes” vs. “activity” (stage-closure). In this model an outcome is defined operationally as a stage-closing commitment: an externally visible event that terminates the search for one disturbance-response stage. It is not “any produced artifact” or “any activity.” In software it might be a merge/accept/release; in other domains it might be an approved artifact, a published paragraph, a signed decision, etc. The theory depends only on having a consistent, externally observable stage-close event.

Clarifying “rework” (in-model). Rework is any externally observable behavior in which previously produced artifacts are revised, undone, deleted, replaced, or corrected due to new evidence (e.g. failing tests, defects, requirement changes, reversals, rollbacks). Rework is observable through external change signals such as deletions, reversions, churn, reopened items, or corrective follow-up actions. Cybernetically: rework is capacity spent on revising prior commitments, which reduces marginal stage-closure per unit capacity because the system revisits and repairs earlier choices instead of closing new stages.

Here are the assumptions our Model is based on:

- Disturbances Y are observed at decision time; uncertainty lies in selecting the appropriate response X.

- A search space Ω is the entire set of all possible configurations, solutions, or states within a given problem domain, encompassing both valid solutions and potential paths to reach them.

- For each disturbance y, the regulator faces a finite response search space Ωy with uniform prior (maximum entropy).

- For the knowledge discovery process there is some true value of the knowledge to be discovered, H(X|Y).

- A selection is a binary decision in a dichotomous search in a search space Ωy.

- The knowledge discovery process is a stream of such binary selections made by a regulator.

- Each operational binary selection corresponds to one unit of information gain from the process Z1...Zk.

- We assume a single shared execution channel with finite capacity per period N, where each unit of capacity is consumed by either one binary selection or one produced outcome (no idle time).

- At n regular time intervals we monitor for changes in the outcomes O. An interval can contain either an outcome or none at all. If the interval contains no outcome we assume there was a selection made by the regulator.

- Let Bt ∈ {0,1} be a binary event-type indicator on the shared execution channel, defined over n regular time intervals t = 1...n: Bt = 1 if interval t contains a realized outcome, and Bt = 0 if it contains a binary selection. This variable is used only to segment and count events; it is not a correctness/success indicator.

- Each produced outcome may be modeled as the termination of a dichotomous search in Ωy; in an optimal such search, each yes/no decision halves the remaining candidate set, yielding one bit per stage[9]. Thus the latent expected number of binary selections per outcome equals the information-theoretic search depth implied by the underlying uncertainty H(X|Y)Ω (up to the standard +1 Shannon bound). Operationally, however, we observe a selection-equivalent depth from execution counts, in which any wasted outcome (rework) is treated as one additional selection-equivalent step because it consumes one unit of the shared channel without adding net progress.

- Disturbances are aggregated at the granularity of outcomes; H(X|Y) is therefore defined relative to the chosen unit of outcome.

- At regular time intervals (e.g. day or week), we estimate the average number of selections per outcome by dividing the total selections by the total outcomes during the interval, using the Shannon bound that the minimum expected number of binary questions to identify X lies between H(X) and H(X)+1[5].

- Non-retroactive windowing. The estimate of H(X|Y) is computed per window without rewriting earlier windows when later rework is discovered. Once a stage-closing commitment occurs, it remains counted as “closed” in that earlier window. If rework happens later, it consumes capacity in later windows, reducing later stage closures and increasing the later measured missing information.

The key idea is that the regulator's capacity to respond correctly is not static: it can change over time as the system learns and adapts. We model this using a time series of pairs (yi, xi), where yi is the i-th disturbance and xi is the i-th response. We then model the dynamics over time using a time series of events (selections and realized outcomes) {e} = {e1, ..., ej}, where ei is the i-th event of the disturbance-response (yi, xi) pair. The event-type indicator forms a binary time series {Bt}, where 0 denotes “one binary selection” and 1 denotes “one realized outcome”:

$$B_t = \begin{cases} 1 & \text{if interval } t \text{ contains a realized outcome} \\ 0 & \text{if interval } t \text{ contains a binary selection} \end{cases}$$

We define an outcome rate (throughput) over the shared execution channel:

$$\theta(\{B_t\}) = \frac{1}{n}\sum_{t=1}^{n} B_t$$

Where:

- θ({Bt}) is the fraction of time intervals that contain realized outcomes (stage-closing commitments).

- n is the total number of observed time intervals on the shared execution channel.

- Bt indicates whether interval t was used to emit an outcome (1) or to perform a binary selection (0).

The total number of realized outcomes is S = Σt Bt = nθ, and the total number of selections is Q = n − S = n(1 − θ). Thus the observed average number of selections per realized outcome is:

$$\frac{Q}{S} = \frac{1 - \theta}{\theta}$$

This models the effectiveness of a regulator: the more 1s, the better it is at selecting the right response to a given disturbance. It also captures dynamic improvement: the sequence of 0s and 1s in {Bt} can shift over time as the regulator improves (more 1s, fewer 0s).
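As a minimal sketch, the counts described above can be read directly off a binary event series. The function name `channel_summary` and the sample series are hypothetical; the only assumption carried over from the model is that every interval holds exactly one selection (0) or one outcome (1).

```python
def channel_summary(b_series):
    """Return (theta, outcomes, selections, selections per outcome) for a 0/1 series."""
    n = len(b_series)
    outcomes = sum(b_series)              # intervals that emitted an outcome
    selections = n - outcomes             # intervals spent on a binary selection
    theta = outcomes / n                  # outcome rate over the channel
    depth = selections / outcomes if outcomes else float("inf")
    return theta, outcomes, selections, depth


# Hypothetical event series: 1 = realized outcome, 0 = binary selection.
b_series = [0, 0, 1, 0, 1, 1, 0, 0, 0, 1]
theta, outcomes, selections, depth = channel_summary(b_series)
print(theta, outcomes, selections, depth)
```

A window with no outcomes at all returns an infinite depth, which matches the reading that nothing was closed in that window.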

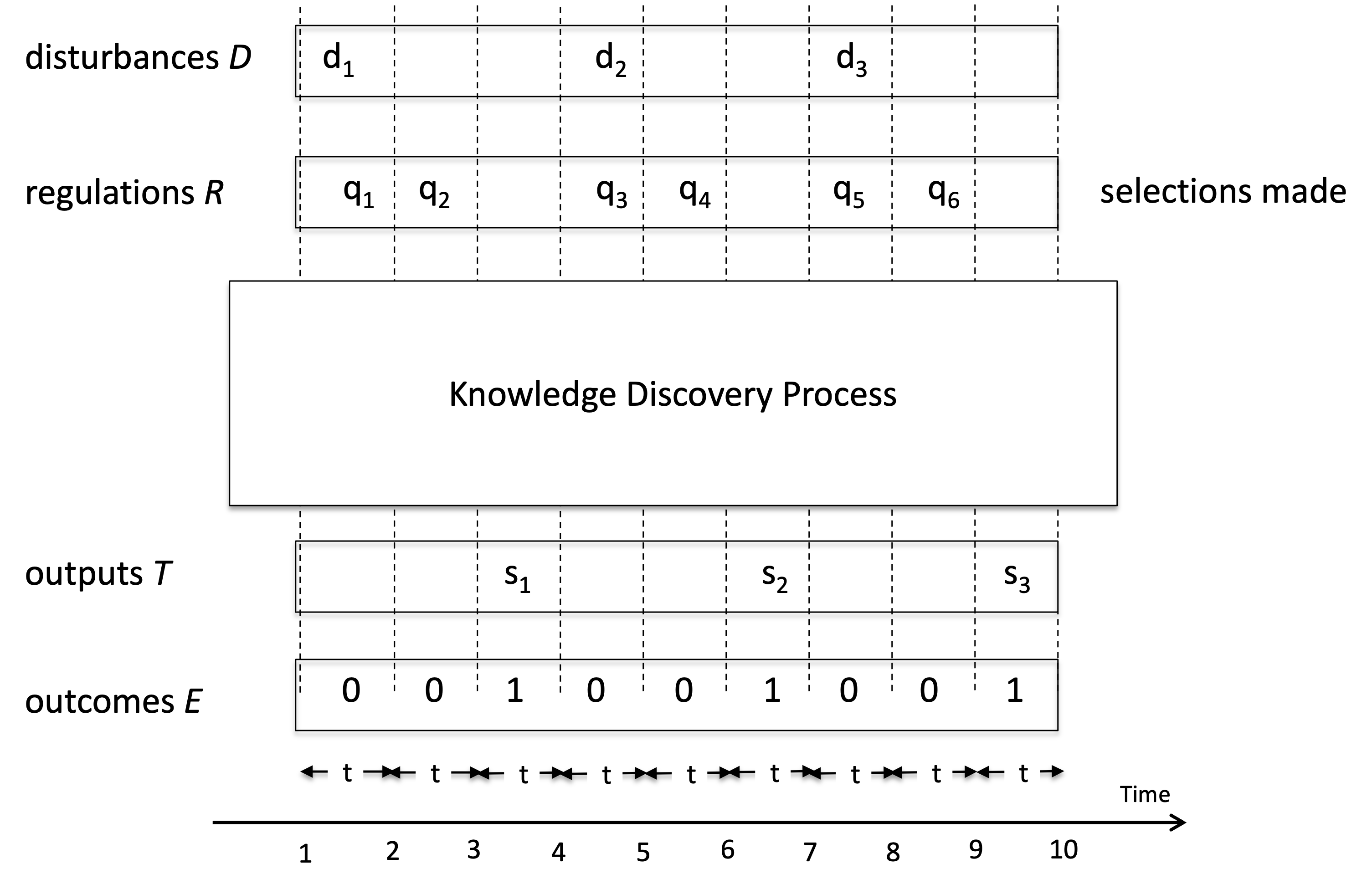

We illustrate the knowledge discovery process in Fig. 1.

Fig.1 A Knowledge Discovery Process. A regulator faces a sequence of disturbances Y and selects responses X over discrete time intervals t. The total available time T is divided into n unit intervals, each of which is used either to perform one optimal binary selection (one internal node traversal in a decision tree) or to emit one realized outcome in O. Three realized outcomes o1, o2, and o3 are produced. For disturbance y1, the correct response is already determined by the stored structural coupling between X and Y (i.e., H(X|Y=y1) = 0), so outcome o1 is emitted with zero selections. For disturbance y2, the regulator must search within the candidate response set Ωy2, traversing four binary decision nodes (z1z2z3z4) before identifying the correct response and emitting o2. For disturbance y3, the candidate set Ωy3 requires two binary selections (z5z6) before outcome o3 is produced. Accordingly, the per-disturbance decision depths are: k1 = 0, k2 = 4, and k3 = 2. The expected number of selections per outcome is therefore E(k) = (0 + 4 + 2)/3 = 2, which operationally estimates the latent conditional entropy H(X|Y) up to the standard +1 Shannon bound. This expected decision depth quantifies the Knowledge To Be Discovered required to produce an outcome.
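The Fig. 1 scenario can be replayed as a short sketch: one outcome with no preceding selections, one preceded by four, and one preceded by two. The encoding of the figure as a 0/1 list and the function name `stage_depths` are illustrative assumptions.

```python
def stage_depths(b_series):
    """Count the selections (0s) that precede each realized outcome (1)."""
    depths, run = [], 0
    for b in b_series:
        if b == 0:
            run += 1                      # one more binary selection in this stage
        else:
            depths.append(run)            # the outcome closes the stage
            run = 0
    # Trailing selections with no closing outcome belong to an unfinished
    # stage and are deliberately not reported.
    return depths


# Fig. 1 encoded as a series: o1, then z1..z4 and o2, then z5 z6 and o3.
fig1_series = [1, 0, 0, 0, 0, 1, 0, 0, 1]
depths = stage_depths(fig1_series)
mean_depth = sum(depths) / len(depths)
print(depths, mean_depth)
```

The mean depth equals the ratio of total selections to total outcomes (6/3), so the per-stage and whole-window views agree.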

We now generalize the process illustrated in Fig. 1. A regulator faces a sequence of disturbances Y and produces outcomes {o} = {o1, o2, o3, ..., on} by selecting responses X. Selections and outcomes share a single execution channel: each time unit is one atomic action, either “ask one binary question” or “emit one outcome.”

To produce a time series of the binary event-type indicator B we put "1" for each time interval when an outcome was produced and "0" for each time interval when a selection was made.

Let {e} denote the complete time-ordered sequence of events (selections and realized outcomes) over a fixed period of length n. Suppose the sequence contains S realized outcomes, where S ≤ n. Let these outcomes be denoted o1...oS.

We partition {e} into S consecutive and non-overlapping subsequences wi, where i ranges from 1 to S. Each subsequence wi corresponds to a stage of a multi-stage selection process for responding to one disturbance yi, and consists of one outcome oi and zero or more selections {zi} preceding it. S counts stage-closing commitments, not mere activity or attempted outcomes.

For each disturbance realization Y = y, there exists a finite candidate set Ωy of admissible responses X, with prior distribution p(x|y).

We assume optimal binary selection: the selection process Z1...Zk is implemented by the regulator through an optimal sequence of binary selections for identifying the correct response given Y = y.

Then the expected number of binary selections required to identify X satisfies the bound:

$$H(X \mid Y = y) \le \mathbb{E}[k \mid Y = y] < H(X \mid Y = y) + 1$$

where k is the number of binary selections preceding an outcome.
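The bound can be checked numerically by building an optimal binary question tree. The sketch below uses Huffman's construction as one standard way to obtain such a tree; the paper does not prescribe a construction, so this choice, the helper names, and the sample distributions are assumptions.

```python
import heapq
import math


def entropy(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def huffman_expected_depth(probs):
    """Expected number of yes/no questions under an optimal (Huffman) tree."""
    heap = [p for p in probs if p > 0]
    if len(heap) <= 1:
        return 0.0                        # nothing to ask when one candidate remains
    heapq.heapify(heap)
    total = 0.0
    while len(heap) > 1:
        a = heapq.heappop(heap)
        b = heapq.heappop(heap)
        total += a + b                    # each merge adds one level above these leaves
        heapq.heappush(heap, a + b)
    return total


uniform = [0.25, 0.25, 0.25, 0.25]        # uniform, power-of-two candidate set
skewed = [0.7, 0.2, 0.1]                  # hypothetical non-uniform prior

for prior in (uniform, skewed):
    h = entropy(prior)
    k = huffman_expected_depth(prior)
    assert h <= k + 1e-12 and k < h + 1   # H <= E[k] < H + 1
    print(f"H = {h:.4f} bits, E[k] = {k:.4f} questions")
```

In the uniform four-candidate case the expected depth meets the entropy exactly; in the skewed case it exceeds the entropy by less than one question.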

Collecting these values yields a sequence of conditional entropies

$$\{H(X \mid Y = y_i)\}_{i=1}^{S}$$

The empirical per-outcome average conditional entropy of the overall knowledge discovery process is estimated (under stationarity/ergodicity assumptions) as:

$$H(X \mid Y) \approx \frac{1}{S}\sum_{i=1}^{S} H(X \mid Y = y_i) \tag{1}$$

Averaging over disturbances yields

$$H(X \mid Y) \le \mathbb{E}[k] < H(X \mid Y) + 1$$

The conditional entropy H(X|Y) lower-bounds the expected optimal binary search depth (to within one bit per disturbance), with equality when p(x|y) is uniform on Ωy and |Ωy| is a power of two. This corresponds to the average number of selections required per outcome.

We define the total number of selections made for the sequence of conditional entropies {H(X|Y=yi)}, taking each stage's entropy to be realized as its observed search depth ki, in the form

$$Q = \sum_{i=1}^{S} k_i \tag{2}$$

Hence the average conditional missing information H is estimated by the average number of selections made to produce one outcome:

$$H = \frac{Q}{S} \tag{3}$$

Under optimal binary selection, the average expected selections per outcome satisfies:

$$H(X \mid Y) \le \frac{Q}{S} < H(X \mid Y) + 1$$

If the minimum outcome duration is one unit of time, equal to the time it takes to make one selection, then each action occupies one unit of time t. Hence the sum of S and Q equals the length n of the time series:

$$S + Q = n$$

The total available time T is therefore:

$$T = n\,t$$

We define r as the maximum outcome rate of the channel, measured in outcomes per second, computed from the outcome duration t:

$$r = \frac{1}{t}$$

Then:

$$n = r\,T$$

We now drop the use of n and instead use N as the maximum action capacity (selections + outcomes) per period.

Consequently, over any observation window with maximum action capacity N and observed number of outcomes S, the number of selections is:

$$Q = N - S$$

which, divided by S, provides an operational estimator of the expected search depth required for regulation:

$$\frac{Q}{S} = \frac{N - S}{S}$$

Rework, waste, and throughput reduction under fixed capacity

Under fixed execution capacity, rework reduces effective throughput because some capacity is spent revising prior commitments rather than closing new stages. In an information-theoretic view, this is the case where an attempted “learning update” produces negative or zero net retained information over the chosen ledger horizon:

$$\Delta_t K = G_t - L_t \le 0$$

Key operational distinction. The execution channel observes attempted stage-closes (commitment emissions), but the model’s “realized outcomes” are net stage closures that survive the ledger horizon. Let:

- N (or Nmax) be anchored action capacity in the window (selections + outcome-emissions).

- S be the number of outcome-emissions (stage-closing commitment attempts) observed in the window.

- W be the number of wasted outcome-emissions in that same window: commitments whose retained contribution over the ledger horizon is ≤ 0 (e.g., reverted/rolled back/fully undone).

- Then Snet = S - W is the number of realized (net) outcomes attributable to that window under the ledger rule.

Because wasted outcomes still consumed capacity, waste must be carved out of observed outcomes, not out of “idle time.” This forces the bookkeeping identities (under the single-channel / no-idle assumption):

- Net throughput decreases: Snet/N = (S − W)/N ≤ S/N.

- Net non-outcome debits increase (selection-equivalent depth): N − Snet = (N − S) + W.

Operational missing information with rework. Rework is not a hidden variable in this model: it appears immediately as reduced Snet (net stage closures) under fixed capacity. The operational missing information is the average non-outcome debits per net stage closure:

$$\hat{H}(X \mid Y) = \frac{N - S_{net}}{S_{net}} = \frac{N - S + W}{S - W}$$

That identity is intentional: under our “anchored capacity + stage-closure” model, rework shows up as reduced Snet, so it is already inside Ĥ(X|Y).

Define the baseline “no-rework” missing information holding gross S fixed:

$$\hat{H}_0(X \mid Y) = \frac{N - S}{S}$$

Then the extra missing information induced by rework is:

$$\Delta \hat{H} = \hat{H}(X \mid Y) - \hat{H}_0(X \mid Y) = \frac{N\,W}{S\,(S - W)}$$

So W increases operational missing information by an amount that is strictly increasing and convex in W, and that diverges as W→S.
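The effect of rework on the operational measure can be sketched as follows. The code assumes the bookkeeping stated above (Snet = S - W, missing information as non-outcome debits per net stage closure); the closed form N·W/(S·(S − W)) is what that bookkeeping gives algebraically, and the function name and counts are hypothetical.

```python
def missing_information(capacity, emissions, wasted=0):
    """Non-outcome debits per net stage closure: (N - S_net) / S_net."""
    net = emissions - wasted              # S_net = S - W
    if net <= 0:
        raise ValueError("no net stage closures in this window")
    return (capacity - net) / net


# Hypothetical window: N = 100 actions, S = 25 emissions, W = 5 of them wasted.
N, S, W = 100, 25, 5
with_rework = missing_information(N, S, W)    # (100 - 20) / 20
baseline = missing_information(N, S)          # (100 - 25) / 25, no rework
extra = with_rework - baseline
closed_form = N * W / (S * (S - W))           # algebraic form of the same difference
print(with_rework, baseline, extra, closed_form)
```

Holding N and S fixed, each additional wasted emission costs more than the previous one, which is the convexity noted above.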

If our counterfactual “no rework” world also changes how many stages get closed in the window (often true), then we should present ΔĤ as a decomposition conditional on fixed S rather than as a universal causal claim.

Implicit vs. explicit rework measurement. Rework influences Ĥ(X|Y) implicitly via the ratio above because bounded capacity crowds out stage closures. Optionally, rework can also be tracked explicitly as a diagnostic decomposition of non-closing work: N − Snet = Q + W, with a simple diagnostic fraction (one example): W/(Q + W) (or equivalently W divided by N − Snet), which explains why missing information increased in a window by quantifying how much capacity was spent undoing/correcting prior work.

Non-retroactive interpretation (again, operationally). We do not rewrite past window measurements when later corrections are discovered. Rework does not “change the past”; it reduces future efficiency because future capacity must be spent correcting past commitments. Thus rework appears as a measurable reduction in future stage-closure rate and a corresponding rise in Ĥ(X|Y) in the windows where rework consumes capacity.

Then, we can state the Knowledge To Be Discovered theorem:

Consider a regulator operating under the following conditions:

- There is a single shared execution channel with no idle time, so that each discrete time unit is used either to:

- perform one binary selection (one yes/no question that halves the remaining candidate set), or

- emit one externally visible outcome.

- For each disturbance realization Y = y, there exists a finite candidate set Ωy of admissible responses X, with prior distribution p(x|y).

- The regulator applies an optimal binary questioning strategy for identifying the correct response given Y = y.

Then the expected number of binary selections required to identify X satisfies the Shannon bound:

$$H(X \mid Y = y) \le \mathbb{E}[k \mid Y = y] < H(X \mid Y = y) + 1$$

where k is the number of binary selections preceding an outcome. Averaging over disturbances yields:

$$H(X \mid Y) \le \mathbb{E}[k] < H(X \mid Y) + 1$$

Consequently, over any fully-utilized observation window of length N time units on the shared execution channel, let:

- S be the number of outcome-emissions (stage-closing commitments) in the window.

- Q be the number of binary selections in the window.

- W be the number of wasted outcome-emissions (commitments that do not count as realized outcomes), with 0 ≤ W ≤ S. The number of realized outcomes is therefore Snet = S − W.

Since each time unit is used either for one selection or one outcome-emission (no idle time), the channel identity is:

$$N = Q + S$$

Define the empirical mean number of selections per realized outcome:

$$\bar{k} = \frac{N - S_{net}}{S_{net}} = \frac{Q + W}{S - W}$$

This ratio is directly observable from execution counts and provides an operational estimator of the expected search depth.

Under the stated assumptions, this estimator satisfies:

$$H(X \mid Y) \le \bar{k} < H(X \mid Y) + 1 \tag{4}$$

and therefore bounds the conditional entropy, i.e. the knowledge to be discovered, up to an additive constant.

The result is operational: it links an abstract information-theoretic quantity to directly observable execution counts (N, S, W), without assuming access to the true distribution. It provides an operational upper bound on conditional entropy under idealized assumptions (optimal binary selection, single shared channel, no idle capacity). It does not claim to recover the true entropy exactly in arbitrary systems.

If there is no knowledge to be discovered, i.e. there is no need to make selections at all, then S equals N and H(X|Y) is zero. This is not redefining entropy — it is measuring it operationally.

We can rearrange the bounds to be:

$$\bar{k} - 1 < H(X \mid Y) \le \bar{k}$$

Conservative Estimator (Operational Upper Bound)

In the idealized case where the posterior over Ωy is uniform and |Ωy| is a power of two, the optimal binary decision tree is perfectly balanced and the bound is tight: E[k] = H(X|Y).

Operationally, over a time window with maximum action rate N and observed outcome rate S, we define the estimator:

$$\hat{H}(X \mid Y) = \frac{N - S}{S} = \frac{N}{S} - 1 \tag{5}$$

We call Ĥ(X|Y) a conservative estimator of the Knowledge To Be Discovered if: (i) it is computable solely from observables (N, S), and (ii) it upper-bounds the latent conditional entropy H(X|Y).

Formally, Ĥ(X|Y) is an operational upper bound on the true conditional entropy, with a guaranteed approximation error of less than one bit:

$$H(X \mid Y) \le \hat{H}(X \mid Y) < H(X \mid Y) + 1$$

This bound follows from the optimality of binary decision trees (or prefix codes): the expected decision depth cannot be smaller than the conditional entropy H(X|Y), and integer decision depths imply an excess of at most one bit.

Interpretation. Ĥ(X|Y) measures the empirically observed expected decision depth per outcome. It is therefore a conservative (never-underestimating) operational proxy for the true Knowledge To Be Discovered H(X|Y). In the idealized uniform, power-of-two case it is exact; otherwise it overestimates by less than one bit. Whatever the true H(X|Y) is, it cannot exceed what is implied by the observed execution capacity.
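A minimal sketch of the estimator follows, assuming it takes the form (N − S)/S, i.e. selections per outcome under the channel identity, and that the latent entropy lies within one bit below it as stated above. The function names and the counts are hypothetical.

```python
def estimate_knowledge_to_discover(capacity, outcomes):
    """Conservative estimate (N - S) / S, in bits per outcome."""
    if not 0 < outcomes <= capacity:
        raise ValueError("need 0 < outcomes <= capacity")
    return (capacity - outcomes) / outcomes


def implied_entropy_interval(estimate):
    """Range for the latent H(X|Y) implied by H <= estimate < H + 1."""
    lower = max(0.0, estimate - 1.0)      # exclusive unless clipped at zero
    return lower, estimate                # upper end is inclusive


# Hypothetical window: 100 units of capacity, 20 outcomes.
h_hat = estimate_knowledge_to_discover(capacity=100, outcomes=20)
low, high = implied_entropy_interval(h_hat)
print(h_hat, low, high)
```

When every unit of capacity yields an outcome (S equals N), the estimate is zero, matching the case where no selections are needed.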

6. Knowledge-Discovery Efficiency (KEDE) Metric

Now we generalize Knowledge-Discovery Efficiency (KEDE), a scalar metric that quantifies how efficiently a system closes the gap between the variety demanded by its environment and the variety embodied in its prior knowledge[28].

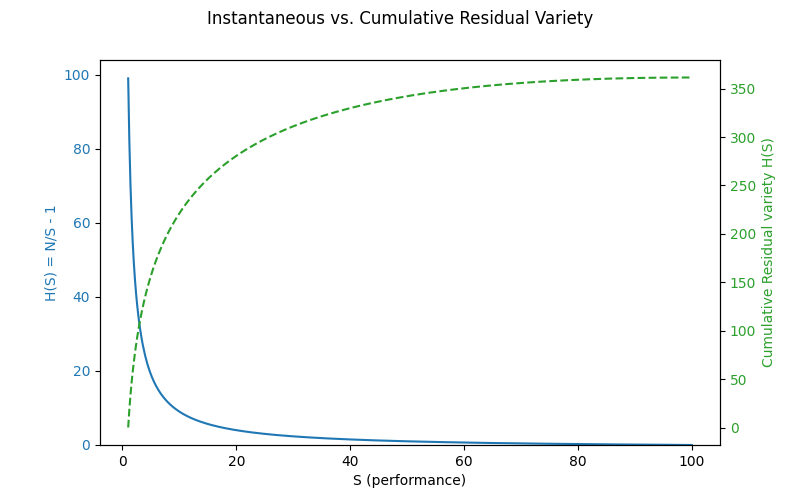

We rearrange formula (5) and, instead of Ĥ(X|Y), write H for notational simplicity. This gives the formula for the Knowledge-Discovery Efficiency (KEDE) metric[28]:

$$KEDE = \frac{S}{N} = \frac{1}{1 + H} \tag{6}$$

KEDE is an acronym for KnowledgE Discovery Efficiency. It is pronounced [ki:d].

Efficiency means the smaller the average number of selections made per outcome the better. In other words - the less knowledge to be discovered per outcome the more efficient the knowledge discovery process is.

KEDE has the properties:

- It is a function of the missing information H

- Its maximum value corresponds to H equals zero i.e. there is no need to make selections, all knowledge is already discovered.

- Its minimum value corresponds to H equals Infinity i.e. we have no knowledge to start with.

- It is continuous in the closed interval of [0,1]. This makes it very useful to be used as a percentage. This is because we need to be able to rank knowledge discovery processes by efficiency. The best ranked knowledge discovery process will have 100% and the worst 0%. That is practical and people are used to having such a scale.

What does KEDE measure?

- Regulation consumes execution capacity to cope with missing knowledge.

- Knowledge discovery converts that consumed capacity into persistent internal variety, reducing future consumption of the execution capacity.

- KEDE measures the efficiency of this conversion: how much execution capacity is spent on discovery versus production, under a successful-adaptation regime.

KEDE effectively converts the knowledge to be discovered H(X|Y), which can range from 0 to infinity, into a bounded scale between 0 and 1.
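The mapping onto a bounded scale can be illustrated with a short sketch. It assumes KEDE = S/N, equivalently 1/(1 + H) with H = N/S − 1, which is the rearrangement of the estimator described above; the function names and counts are hypothetical.

```python
def kede_from_counts(capacity, outcomes):
    """KEDE as the share of capacity that ended in outcomes: S / N."""
    return outcomes / capacity


def kede_from_missing_information(h):
    """KEDE as a function of missing information H (bits per outcome)."""
    return 1.0 / (1.0 + h)


# Hypothetical window: N = 100, S = 25, so H = N/S - 1 = 3 bits per outcome.
N, S = 100, 25
h = N / S - 1
print(kede_from_counts(N, S), kede_from_missing_information(h))

# The listed properties: H = 0 gives the maximum, large H approaches zero.
print(kede_from_missing_information(0.0), kede_from_missing_information(1e9))
```

Both routes give the same value, so KEDE can be computed from raw counts without estimating H first.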

Due to its general definition KEDE can be used for comparisons between organizations in different contexts. For instance to compare hospitals with software development companies! That is possible as long as KEDE calculation is defined properly for each context. In what follows we will define KEDE calculation for the case of knowledge workers who produce textual content in general and computer source code in particular.

Anchoring KEDE to Natural Constraints

In our model, N is always the theoretical maximum action rate (selections + outcomes) in an unconstrained environment, and S is the observed outcome rate under specific conditions over a given interval.

A key question is how to assign a natural constraint to N. That is, what constitutes an appropriate reference value for the maximum action rate (selections + outcomes)?

We may turn to physics for an instructive analogy. A quantum (plural: quanta) represents the smallest discrete unit of a physical phenomenon. For instance, a quantum of light is a photon, and a quantum of electricity is an electron. In this context, the speed of light in a vacuum serves as a fundamental upper bound for N. However, identifying an analogous natural constraint for human activity—particularly knowledge work—presents greater challenges.

Consider the example of typing. Here, the quantum can reasonably be defined as a symbol, since it is the smallest discrete unit of text. A symbol may be a letter, number, punctuation mark, or whitespace character. To determine the appropriate bin width Δt, we refer to empirical data on the minimum time required to produce a single symbol. Typing speed has been subject to considerable research. One of the metrics used for analyzing typing speed is the inter-key interval (IKI), which is the difference in timestamps between two keypress events. IKI is therefore, by definition, the symbol duration t, so IKI research gives us t directly. Studies have reported an average IKI of 0.238 seconds [26], yielding a maximum human typing rate of approximately N = 1/t = 1/0.238 ≈ 4.2 symbols per second.

A similar approach can be applied to tasks such as furniture assembly. In this case, a plausible quantum is a single screw tightened, since it represents a minimal, repeatable unit of outcome. We then identify Δt as the average time required to tighten one screw. Empirical studies report that this task typically takes between 5 and 10 seconds[34]. Using the upper bound of 10 seconds as the unit duration t, we estimate the maximum screw-tightening rate as N = 1/t = 1/10 = 0.1 screws per second.

This methodology offers a principled way to estimate N using domain-specific quanta and empirically grounded time durations, enabling the application of our model to a broad range of human tasks.
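The anchoring arithmetic can be written out as a small sketch. The unit durations are the ones quoted above; the observed typing rate of 1 symbol per second and the function name `reference_rate` are hypothetical choices made only to show how N feeds into the estimate.

```python
def reference_rate(unit_duration_s):
    """Maximum action rate N, in quanta per second, from the time per quantum."""
    return 1.0 / unit_duration_s


typing_n = reference_rate(0.238)   # average inter-key interval quoted above
screw_n = reference_rate(10.0)     # upper bound of the 5-10 s range quoted above
print(round(typing_n, 2), screw_n)

# Hypothetical observation: a typist produces 1 symbol per second.
observed_typing_rate = 1.0
h_typing = typing_n / observed_typing_rate - 1.0   # N/S - 1, bits per symbol
kede_typing = observed_typing_rate / typing_n      # S/N
print(round(h_typing, 2), round(kede_typing, 3))
```

Because N enters only through the ratio with S, the same unit (symbols per second, screws per second) must be used for both rates.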

The next question concerns the appropriate definition of outcome for measuring S and N.

Both N and S can always be discretized (or “binned”) in a way that preserves the total information rate, regardless of whether the outcome arises from natural processes, human behavior, or machines. By choosing a bin width Δt small enough (e.g., milliseconds), the range of possible tangible outcomes within each bin shrinks dramatically. This reduced range leads to less uncertainty in each bin, which compensates for the smaller time interval. Yet the ratio

$$\frac{S}{N}$$

remains an accurate measure of information rate.

As Δt becomes smaller, the measurements of S and N become more precise, as they reflect outcomes over finer time intervals. But how small should Δt be? This dilemma is resolved by considering the granularity of the outcomes themselves. The set E of outcomes can be thought of as the effects of the regulation process: the resulting states after the regulator responds to disturbances. In our model E is a sequence of values in {0,1}, where 0 = wrong outcome (failure to regulate) and 1 = correct outcome (successful selection). So the presence of a concrete outcome leads to a natural binning of the outcomes. It also enables a clear distinction between signal (the entropy associated with producing the outcome) and noise (the residual variability unrelated to success or failure).

For example, two distinct symbols typed (e.g., 'a' vs. 'b') are clearly different outcomes. However, if one symbol is typed in 91 milliseconds and another in 92 milliseconds, this minute variation is inconsequential to the outcome. Such timing fluctuations are typically unintentional, irrelevant to task performance, and should not be considered part of the outcome. In practical terms, if the theoretical upper bound N is known (for instance, 4.2 symbols per second as derived from human typing speed) and the observed rate is S = 1 symbol per second, then time should be partitioned into one-second bins. Each bin then yields a single outcome: either 1 (a symbol was successfully typed) or 0 (no symbol typed or incorrect input).
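One way to realize this binning is sketched below. It assumes keypress timestamps in seconds measured from the start of the window; the function name `bin_events`, the timestamps, and the rule that several events in one bin collapse to a single 1 are illustrative assumptions.

```python
def bin_events(timestamps_s, window_s, bin_width_s=1.0):
    """Mark each bin 1 if at least one event fell inside it, else 0."""
    n_bins = int(window_s / bin_width_s)
    bins = [0] * n_bins
    for ts in timestamps_s:
        index = int(ts // bin_width_s)    # which bin this event belongs to
        if 0 <= index < n_bins:
            bins[index] = 1               # sub-bin timing differences are ignored
    return bins


# Hypothetical keypresses: two land in the first second, 1 ms apart in duration.
keypress_times = [0.091, 0.183, 2.4, 4.05]
series = bin_events(keypress_times, window_s=5)
print(series)
```

The millisecond-level difference between the first two keypresses leaves no trace in the series, which is the intended separation of outcome from timing noise.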

This binning principle generalizes beyond typing. Whether analyzing foot strikes in trail running (where negligible spatial change occurs over milliseconds) or the discrete moves in solving a Rubik's cube (where each turn resolves multiple potential states into a single action), binning ensures that no intermediate state need be modeled explicitly.
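The binning step can be sketched in Python as follows. This is only an illustration under stated assumptions: the function name `bin_outcomes`, the event format (a timestamp in seconds plus a correctness flag), and the sample keystrokes are hypothetical and do not come from the model itself; the one-second bin width follows the typing example above.

```python
from typing import List, Tuple


def bin_outcomes(events: List[Tuple[float, bool]],
                 bin_width: float,
                 total_time: float) -> List[int]:
    """Map timestamped events to one binary outcome per time bin.

    A bin yields 1 if at least one correct outcome falls inside it,
    otherwise 0 (nothing produced, or only incorrect input).
    """
    n_bins = int(round(total_time / bin_width))
    outcomes = [0] * n_bins
    for timestamp, correct in events:
        index = int(timestamp // bin_width)
        if correct and 0 <= index < n_bins:
            outcomes[index] = 1
    return outcomes


# Hypothetical keystrokes: (time in seconds, typed correctly?)
events = [(0.091, True), (1.092, True), (2.5, False), (4.2, True)]
sequence = bin_outcomes(events, bin_width=1.0, total_time=5.0)
print(sequence)  # [1, 1, 0, 0, 1]
```

Note that the millisecond-level difference between the first two keystrokes (0.091 s vs. 1.092 s into their respective bins) has no effect on the resulting sequence; only the presence or absence of a correct outcome per bin is recorded.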

7. Applications

The knowledge-centric perspective builds on Ashby's Law of Requisite Variety by emphasizing that successful outcomes depend not only on a system's range of possible responses, but also on its ability to select the right response for each disturbance. This requires internal “system knowledge” that maps disturbances to appropriate actions. As Francis Heylighen proposed in his “Law of Requisite Knowledge,” effective regulation demands more than variety—it demands informed selection[29]. This knowledge-centric lens provides a foundation for analyzing how systems—biological, technical, or organizational—achieve control not just through options, but through understanding. The model we present operationalizes this perspective by estimating the informational requirements a system must satisfy to achieve its observed level of regulatory performance.

In what follows, we apply this knowledge-centric perspective to a range of domains, including motor tasks and manual assembly, industrial assembly lines, software development processes, the speed of light in a medium, intelligence testing, and sports performance. In each case, the model enables us to estimate, in bits of information, the amount of knowledge a system must lack to produce its observed level of performance. By quantifying the knowledge to be discovered H(X|Y), we assess how much uncertainty there was in the system's ability to select appropriate responses. This allows us to compare systems not by tangible outcomes, but by the hidden knowledge structures required to achieve them, offering a unified lens for analyzing adaptation, skill, and control across diverse contexts.

Tightening screws

We can apply our model to motor tasks such as furniture assembly. In this context, a natural unit of outcome — or “quantum” — is the tightening of a single screw.

Skilled workers engaged in manual assembly tasks can typically insert and tighten standard screws at a rate of 6–12 screws per minute under optimal, repetitive conditions, such as those found in furniture construction or industrial assembly lines. In contrast, automated screw-tightening machines can achieve significantly higher rates, often between 30 and 60 screws per minute [34]. More complex manual tasks, such as high-torque applications involving ratchets or Allen keys, typically reduce the rate to 2–4 screws per minute due to the increased effort and precision required. In surgical or medical contexts, such as orthopedic screw insertion, accuracy and the avoidance of overtightening are paramount; here, rates often fall to 1–2 screws per minute, or approximately one screw every 30–60 seconds [46].

| Context | Typical Rate (screws/minute) | Notes |
|---|---|---|
| Automated (machine) | 30–60 | For comparison, not manual |
| Fast, repetitive tasks | 6–12 | Assembly line, minimal torque required |
| High-torque/manual | 2–4 | Metalwork, ratchets, Allen keys |
| Surgical/precision | 1–2 | Orthopedic, high accuracy, low speed |

The key observation is that rates decrease as torque, task complexity, or required precision increases. If we take the machine rate as the maximum possible outcome N and the observed human rate as S, we can estimate the average number of bits of information H(X|Y) that the human operator must process per action.

This implies that the human must absorb approximately 4 bits of information, on average, to tighten a single screw under typical conditions.
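The arithmetic behind this estimate can be sketched as follows. The sketch assumes the relation KEDE = S/N = 1/(1 + H(X|Y)), so that H(X|Y) = N/S − 1; this assumption is consistent with the 4-bit figure above. The function names and the use of range midpoints are illustrative choices, not part of the model.

```python
def knowledge_to_discover(n_max: float, s_observed: float) -> float:
    """Assumed relation: H(X|Y) = N / S - 1, in bits per outcome."""
    if s_observed <= 0 or s_observed > n_max:
        raise ValueError("expected 0 < S <= N")
    return n_max / s_observed - 1


def midpoint(rate_range):
    """Midpoint of a (low, high) rate range."""
    low, high = rate_range
    return (low + high) / 2


# Rates in screws per minute, taken from the table above as (low, high).
machine = (30, 60)
manual_contexts = {
    "Fast, repetitive tasks": (6, 12),
    "High-torque/manual": (2, 4),
    "Surgical/precision": (1, 2),
}

n_max = midpoint(machine)  # machine rate used as N
for context, rate_range in manual_contexts.items():
    bits = knowledge_to_discover(n_max, midpoint(rate_range))
    print(f"{context}: {bits:.0f} bits per screw")
```

Under these assumptions, the fast, repetitive case yields the 4 bits per screw stated above, while the slower high-torque and surgical contexts correspond to larger values (14 and 29 bits per screw, respectively).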

The rate at which a person tightens screws depends on various factors, including:

- Screw type and size

- Material being fastened

- Required torque

- Tool used (screwdriver, ratchet, etc.)

- Operator skill and fatigue

This interpretation aligns with existing research, which suggests that task difficulty directly influences the amount of information a task imparts [47, 48]. When difficulty is appropriately matched to the individual's skill level, the task yields maximal informational value [49], and the time required reflects the interaction between task complexity and the individual's regulatory capacity [50].

Using our model, we transform a sequence of real-world actions in furniture assembly into a granular, time-based measure of regulatory capacity. This enables us to quantify — in bits — how much variety the individual must absorb in order to successfully complete the task.

Typing the longest English word

Let's use an example scenario to see Ashby's law applied to human cognition and knowledge work.

For this, I will type the word “Honorificabilitudinitatibus” on a keyboard. It means “the state of being able to achieve honours” and is mentioned by Costard in Act V, Scene I of William Shakespeare's “Love's Labour's Lost”. With its 27 letters, “Honorificabilitudinitatibus” is the longest word in the English language featuring only alternating consonants and vowels.

To execute this task, I go to the play text (script) of “Love's Labour's Lost”, look up the word, and type it out. The manual part of the task is typing the 27 letters. The knowledge part of the task is knowing which 27 letters those are.

In order to track the knowledge discovery process I will put "1" for each time interval when I have a letter typed and "0" for each time interval when I don't know what letter to type.

I start by taking a good look at the word “Honorificabilitudinitatibus” in the script of “Love's Labour's Lost”. That takes me two time intervals. Then I type the first letters “H”, “o”, and “n”. I continue typing letter after letter: “o”, “r”. At this point I cannot recall the next letter. What should I do? I am missing information, so I open the script of “Love's Labour's Lost” and look up the word again. Now I know what the next letter is, but acquiring that information took me one time interval. This time I have remembered more letters, so I am able to type “i”, “f”, “i”, “c”, “a”, “b”, “i”. Then again I cannot continue because I have forgotten the next letters of the word, so I have to look it up again in the script. That takes two more time intervals. Now I can continue typing “l”, “i”, “t”. At this point I stop again because I am not sure what the next letters are, so I have to think about it. That takes one time interval. I continue typing with “u”, “d”, “i”. Then I stop again because I have once more forgotten the next letters, so I have to look them up again in the script. That takes two more time intervals. Now I know what the next letter is, so I can continue typing “n”, “i”. At this point I cannot recall the next letter, so I have to look it up again in the script. That takes two more time intervals. Once I know what the next letter is, I can continue typing “t”, “a”, “t”, “i”, “b”, “u”, “s”. Eventually I am done!

At the end of the exercise I have the word “Honorificabilitudinitatibus” typed and along with it a sequence of zeros and ones.

|   |   | H | o | n | o | r |   | i | f | i | c | a | b | i |   |   | l | i | t |   | u | d | i |   |   | n | i |   |   | t | a | t | i | b | u | s |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

In the table we have separated the manual work of typing from the knowledge work of thinking about what to type.

The first row of the table shows the knowledge I manually transformed into a tangible outcome, in this case the longest English word. The second row of the table shows the way I discovered that knowledge. There is a "0" for each time interval when I was missing information about what to type next. There is a "1" for each time interval when I had prior knowledge about what to type next. Each "0" represents a selection I needed to make in order to acquire the missing information about what letter to type next. Each "1" represents prior knowledge.

In the exercise above we witnessed the discovery of invisible knowledge and its transformation into a visible, tangible outcome.

KEDE calculation

We can calculate the KEDE for this sequence of outcomes.

We can also calculate the knowledge discovered H(X|Y) in bits of information.
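As a minimal sketch, both quantities can be computed directly from the binary sequence in the table. The code assumes KEDE = S/N, with S the number of 1s and N the total number of time intervals, and the corresponding relation H(X|Y) = N/S − 1 = 1/KEDE − 1; the variable names are illustrative.

```python
# Outcome sequence from the table: 1 = letter typed, 0 = information missing.
outcomes = [0, 0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1,
            0, 1, 1, 1, 0, 0, 1, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1]

S = sum(outcomes)   # intervals in which a letter was typed
N = len(outcomes)   # all intervals, i.e. the maximum possible outcome

kede = S / N               # assumed: KEDE = S / N
missing_bits = N / S - 1   # assumed: H(X|Y) = N / S - 1

print(f"S = {S}, N = {N}")
print(f"KEDE = {kede:.2f}")
print(f"H(X|Y) = {missing_bits:.2f} bits per letter")
```

With 27 letters typed in 37 intervals, this gives a KEDE of about 0.73 and roughly 0.37 bits of knowledge discovered per letter, under the stated assumptions.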

We've turned a real-world sequence of action and hesitation into a fine-grained, time-based measurement of regulatory capacity. In effect, we measured how much variety I needed to absorb with external help, that is, the knowledge I discovered.

Measuring software development

In order to use the KEDE formula (6) in practice we need to know both S and N. We can count the actual number of symbols of source code contributed straight from the source code files. For N we want to use some naturally constrained value.

N is the maximum number of symbols that could be contributed for a time interval by a single human being.

N is computed from the following formula:

$$N = T \cdot r$$

where T is the length of the working-time interval and r is the maximum symbol rate.

To achieve this, the following estimation is performed. We pick T = 8 hours of work because that is the standard length of a work day for a software developer.

To calculate the value of r we need to pick the symbol duration t.

The value of the symbol duration time t is determined by two natural constraints:

- the maximum typing speed of human beings

- the capacity of the cognitive control of the human brain

Typing speed has been the subject of considerable research. One of the metrics used for analyzing typing speed is the inter-key interval (IKI), which is the difference in timestamps between two keypress events. The IKI is thus, by definition, equal to the symbol duration time t, so we can use IKI research to determine t. The average IKI was found to be 0.238 s [26]. Many factors affect IKI [6]. It was also found that proficient typing depends on the ability to view characters in advance of the one currently being typed. The median IKI was 0.101 s for typing with unlimited preview and for typing with 8 characters visible to the right of the to-be-typed character, but 0.446 s with only 1 character visible prior to each keystroke [7]. Another well-documented finding is that familiar, meaningful material is typed faster than unfamiliar, nonsense material [8]. A further finding that may account for some of the IKI variability is the so-called “word initiation effect”: if words are stored in memory as integral units, the latency of the first keystroke in a word can be expected to reflect the time required to retrieve the word from memory [55].

Cognitive control, also known as executive function, is a higher-level cognitive process that involves the ability to control and manage other cognitive processes that permit selection and prioritization of information processing in different cognitive domains to reach the capacity-limited conscious mind. Cognitive control coordinates thoughts and actions under uncertainty. It's like the "conductor" of the cognitive processes, orchestrating and managing how they work together. Information theory has been applied to cognitive control by studying the capacity of cognitive control in terms of the amount of information that can be processed or manipulated at any given time. Researchers found that the capacity of cognitive control is approximately 3 to 4 bits per second [32][33]. That means cognitive control, as a higher-level function, has a remarkably low capacity.

Based on the above research we get:

- Maximum typing speed of human beings to be r = 1/t = 1/0.238 ≈ 4.2 symbols per second

- Capacity of the cognitive control of the human brain to be approximately 3 to 4 bits per second. Since we assume one question equals one bit of information, we get 3 to 4 questions per second.

- Asking questions is an effortful task, and humans cannot ask questions and type at the same time. If a symbol was NOT typed, then a question was asked. That means the question rate equals the symbol rate.

In order to get a round value of maximum symbol rate N of 100 000 symbols per 8 hours of work we pick symbol duration time t to be 0.288 seconds. That is a bit larger than what the IKI research found but makes sense when we think of 8 hours of typing. Having t of 0.288 seconds makes a symbol rate r of 3.47 symbols per second. That is between 3 and 4 and matches the capacity of the cognitive control of the human brain.

We define CPH as the maximum rate of characters that could be contributed per hour. Since r is 3.47 symbols per second, we get a CPH of 12 500 symbols per hour. Substituting T = h and r = CPH, the formula for N becomes:

$$N = h \cdot \mathrm{CPH}$$

where h is the number of working hours in a day and CPH is the maximum number of characters that could be contributed per hour. We define h to be eight hours and get N to be 100 000 symbols per eight hours of work.
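The estimation above can be checked numerically. The following sketch only reproduces the arithmetic given in the text (average IKI of 0.238 s, t = 0.288 s, h = 8 hours); the constant and variable names are illustrative.

```python
SECONDS_PER_HOUR = 3600

iki_average = 0.238      # average inter-key interval in seconds [26]
symbol_duration = 0.288  # symbol duration t chosen in the text, in seconds
hours_per_day = 8        # h, working hours in a day

max_typing_rate = 1 / iki_average  # about 4.2 symbols per second
r = 1 / symbol_duration            # about 3.47 symbols per second
cph = r * SECONDS_PER_HOUR         # maximum characters per hour
n_max = hours_per_day * cph        # N = h * CPH

print(f"max typing rate = {max_typing_rate:.1f} symbols/s")
print(f"r = {r:.2f} symbols/s")
print(f"CPH = {cph:.0f} symbols/hour")
print(f"N = {n_max:.0f} symbols per {hours_per_day} hours")
```

Choosing t = 0.288 s is what makes CPH come out at 12 500 and N at 100 000; the measured average IKI of 0.238 s would instead give a higher bound.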

Total working time consists of four components:

- Time spent typing (coding)

- Time spent figuring out WHAT to develop

- Time spent figuring out HOW to code the WHAT

- Time doing something else (NW)